The Oracle Problem: QA’s Oldest Pain Point

For decades, the hardest part of automated testing hasn’t been writing tests; it’s been knowing whether the system’s behavior is actually correct.

In software testing, an oracle is any mechanism that tells you whether a given test passed or failed for the right reason. It’s the “expected result” your test framework compares against.

But here’s the catch: most regression suites don’t have true oracles. They rely on assertions that are manually written, brittle, and often incomplete.

If an app’s UI changes or a field name shifts, the test breaks; not because the feature is broken, but because the assertion logic is stale.

And even worse, most regression tests silently pass when they shouldn’t, because the “expected” data hasn’t been updated in months.

This is the Oracle Problem; and it’s one of the quietest forms of technical debt in every QA organization.

But now, something interesting is happening. GPT models are learning how to reason about test output, historical baselines, and business rules in ways that humans never scaled to. And that might just change regression testing forever.

What Is a Regression Oracle, Really?

When we say “regression oracle,” we’re talking about a system that can automatically determine whether a behavior change between builds is acceptable or not.

In other words, given:

- Build A (the last known good version)

- Build B (the new candidate)

- A set of test results, screenshots, or logs

A regression oracle can tell you:

✅ “This difference is intentional (expected)”

❌ “This difference indicates a potential defect”

Traditionally, these oracles are handcrafted: JSON diffs, visual baselines, or approval files. Tools like Jest, Cypress, and Playwright use them all the time. But as applications evolve faster and UI variance grows, these static baselines collapse under their own maintenance cost.

So, QA engineers prune tests, skip flaky ones, or freeze their baselines; trading speed for stability.

Enter GPT.

From Static Baselines to Dynamic Reasoning

Large Language Models (LLMs) like GPT aren’t just text generators; they’re probabilistic reasoning engines. Given structured input (like JSON or API responses) and natural-language context (“expected result: patient address should match record”), GPT can evaluate semantic correctness; not just byte-by-byte equivalence.

Let’s illustrate:

Traditional Oracle:

assert response["status"] == "ACTIVE"

GPT-Enhanced Oracle:

“Compare this API response to the previous version. Determine if the differences are intentional based on business logic, schema version, and changelog context.”

Instead of asserting exact equality, GPT could read both responses and reason:

- “The status field changed from ACTIVE → ENABLED, which aligns with the new schema naming in version 2.3.”

- “New optional field ‘verification_date’ appears; likely valid addition.”

- “Removed ‘is_verified’ flag may cause downstream data issues; flag for human review.”

This isn’t fantasy; it’s the next phase of semantic validation.

GPT doesn’t just check values. It understands intent.

How GPT Builds the Oracle

A working GPT-powered regression oracle typically has four stages:

- Data Capture – Collect test outputs from both builds (logs, screenshots, JSONs, HTML DOMs, etc.).

- Change Diffing – Use lightweight deterministic diffs to highlight what actually changed.

- Context Feeding – Provide GPT with metadata: schema versions, release notes, test descriptions, and risk tags.

- Semantic Evaluation – GPT compares both states, explains differences, and classifies them:

- Expected regression (intentional)

- Unexpected change (potential defect)

- Inconclusive (needs human validation)

When built right, this system becomes a “regression triage AI.”

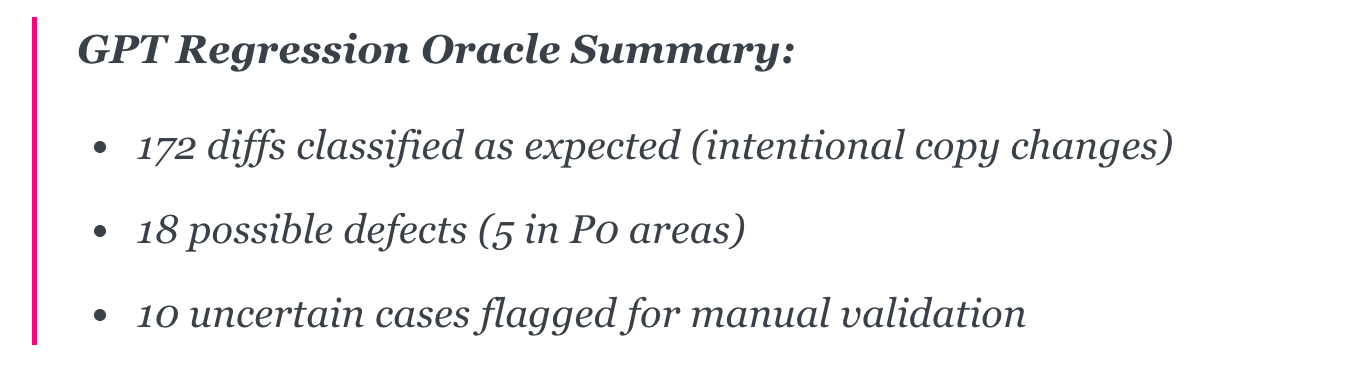

Instead of drowning in 200 failed visual diffs, your QA lead opens Slack to see:

This turns a day of mind-numbing comparison into a 10-minute triage review.

The Benefits: Beyond Test Automation

- Massive Time Savings

Instead of engineers reviewing screenshots or JSON dumps, GPT pre-filters the noise. Review time per regression can drop from hours to minutes. - Reduced False Positives

GPT learns what counts as “noise” (e.g., timestamps, UUIDs, CSS shifts) and stops flagging irrelevant changes. - Cross-Version Intelligence

GPT can use context, like a change-log, to explain differences that stem from legitimate feature updates. - Continuous Learning

As more builds pass through the system, GPT learns the difference between “expected evolution” and “unexpected drift.”

Explainability for Stakeholders

Unlike raw diffs, GPT outputs are readable. Product managers can literally read why a test failed or passed:

“The label ‘Patient Info’ was renamed to ‘Patient Details’ - consistent with Figma update in Sprint 42.”

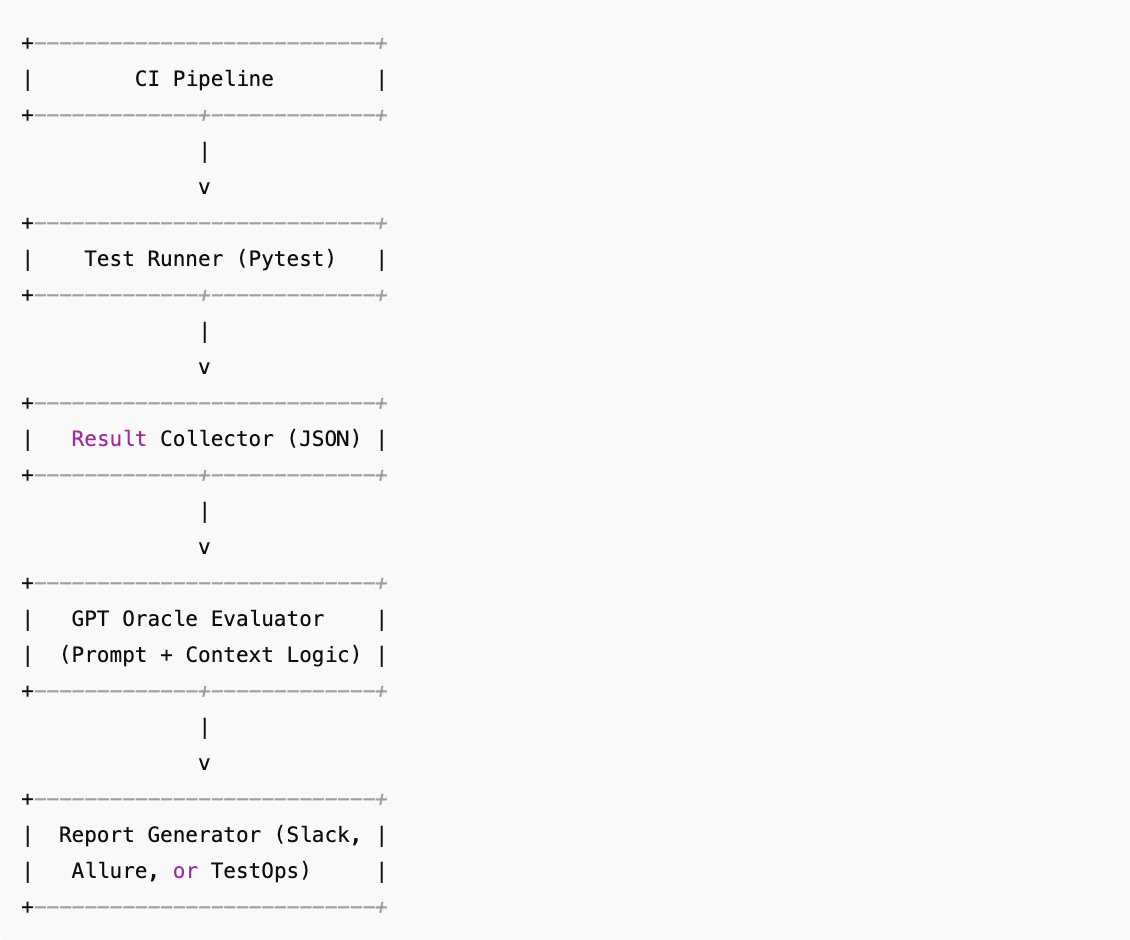

The Architecture: Building a GPT Oracle Layer

You don’t need to rebuild your CI/CD pipeline from scratch. Most GPT regression oracles can be layered onto existing frameworks like Playwright, Pytest, or Cypress.

A simplified architecture looks like this:

The GPT Oracle Evaluator becomes a new stage in your regression pipeline; like a “semantic diff engine.”

In Pytest or Playwright, this can be as simple as:

pytest tests/regression \

--json-report \

&& python tools/gpt_oracle.py reports/report.json

The script collects deltas, generates structured prompts, sends them to GPT (or a fine-tuned local LLM), and outputs a summarized classification file.

Avoiding the “AI Hallucination” Trap

Of course, GPT isn’t perfect. It can hallucinate or misclassify subtle defects. But in practice, this risk can be mitigated with structured prompting and guardrails:

- Feed Both States – Always provide “before” and “after” versions of output, never isolated deltas.

- Constrain with Schema – Include field definitions and type expectations so GPT doesn’t invent properties.

- Use Chain-of-Thought Templates – Force the model to reason step-by-step (“Compare fields → evaluate → conclude”).

- Human-in-the-Loop Review – Any “uncertain” classification automatically routes to manual triage.

In mature systems, GPT isn’t a decision-maker; it’s a triage assistant.

The Future: Continuous Quality Oracles

As teams adopt GPT-driven oracles, the concept evolves from “pass/fail” into probabilistic quality assessment.

Instead of binary outcomes, you’ll see confidence scores:

| Test | Change Summary | GPT Confidence | Classification |

|---|---|---|---|

| API: GetPatient | Field rename | 0.97 | Expected |

| UI: AppointmentCard | Missing icon | 0.81 | Potential defect |

| Data Export | Numeric drift | 0.54 | Inconclusive |

This unlocks entirely new workflows:

- Prioritize human review by risk level.

- Auto-accept low-risk diffs below threshold.

- Track “semantic drift” trends over time.

Eventually, your GPT oracle can become a Quality Memory; a long-term knowledge base that understands your app’s evolution, business rules, and risk landscape.

What This Means for QA Engineers

If you’re a QA leader, this shift doesn’t make your role obsolete; it makes it strategic.

Instead of maintaining brittle baselines, your team becomes curators of test intelligence. You define the rules, train the model’s expectations, and supervise its learning curve.

Think of GPT as your newest junior analyst: fast, tireless, but in need of mentorship.

The best QA engineers won’t be those who write the most tests. They’ll be the ones who teach AI to judge tests correctly.

A Realistic Path Forward

Here’s how you can start experimenting:

- Collect Diff Data – Export regression results from your last few builds (Playwright, Postman, etc.).

- Manually Label a Small Set – Mark each diff as “expected” or “unexpected.”

- Feed It to GPT – Use structured prompts to see how accurately it matches your judgment.

- Integrate via API – Once you trust it, call GPT from your CI after test execution.

- Iterate and Calibrate – Continuously improve prompts and guardrails.

Within a few sprints, you’ll have a lightweight “oracle assistant” catching 70–80 % of noise automatically; and giving your engineers back their time.

A Shift Towards Intelligence Coverage

Regression testing used to be about code coverage and assertion counts.

Now, it’s shifting toward intelligence coverage; how deeply your systems understand the meaning of change.

GPT won’t make QA obsolete. It’ll make it smarter.

By turning every test result into a conversation about intent, GPT finally gives us something that’s been missing for decades: context-aware validation.

And that’s the difference between automation that merely runs; and automation that truly thinks.

👉 Want more posts like this? Subscribe and get the next one straight to your inbox. Subscribe to the Blog or Follow me on LinkedIn

Comments ()