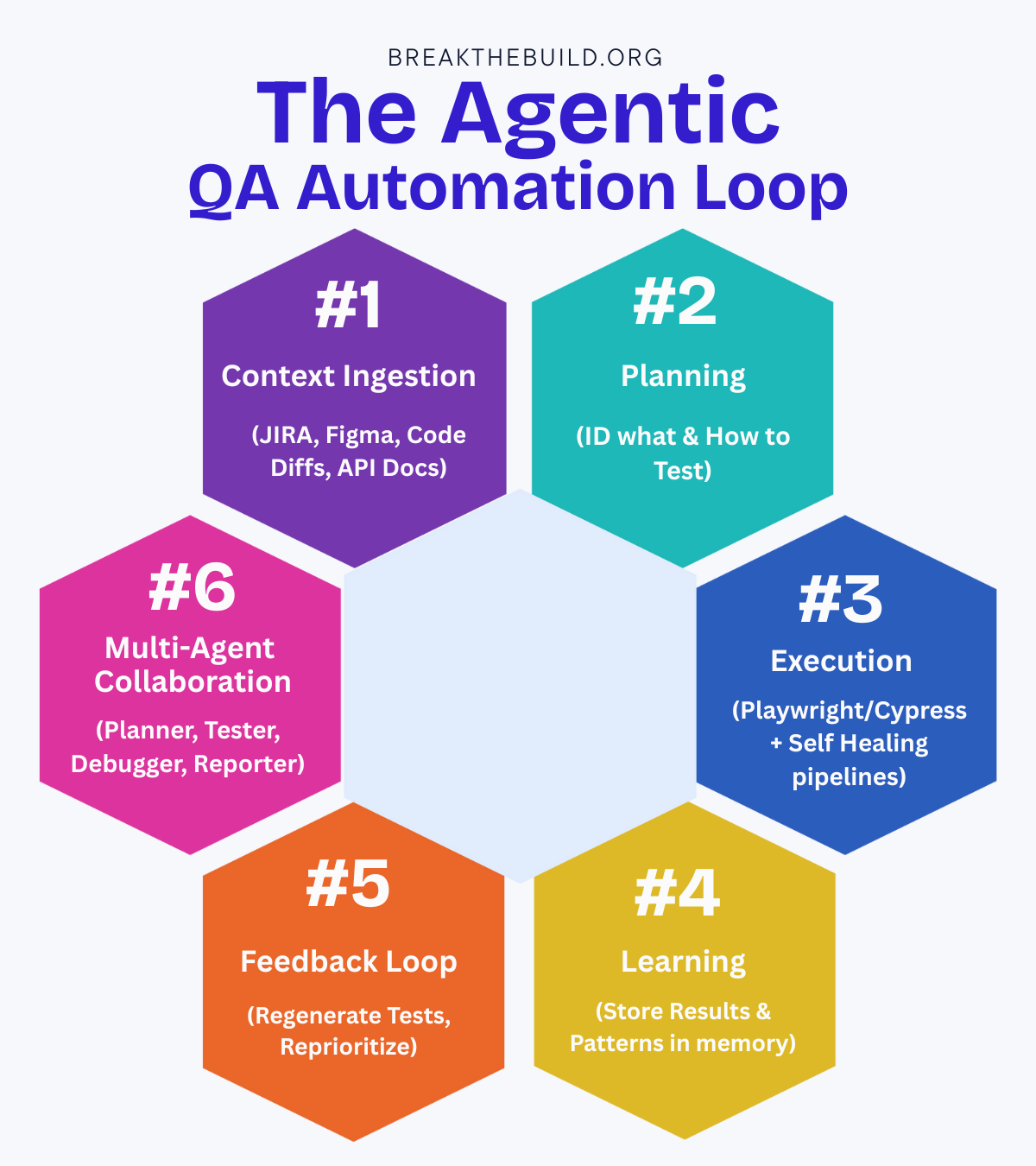

The Agentic QA Automation Loop; A Plain‑English Guide + Starter Blueprint

Why this matters: Traditional automation treats testing as a pile of scripts. Agentic automation treats it as a closed loop that learns from every run, plans the next move, and coordinates specialized “workers” (agents) to deliver higher coverage with less busywork. Below is a practical guide to each step in the loop, plus a lightweight blueprint you can start implementing this week.

What “agentic” actually means

An agent is software that can:

- gather context,

- decide what to do next,

- call tools (e.g., Playwright, Jira APIs), and

- update its memory so it improves over time.

An agentic loop chains several focused agents (planner, tester, debugger, reporter) so the system keeps improving without you micro‑managing every test.

#1 Context Ingestion

(Jira, Figma, code diffs, API docs)

Goal: Give the system the same inputs a human tester would scan before writing tests.

Typical inputs

- Work items: Jira stories/acceptance criteria, linked bugs.

- Designs: Figma frames, component names, flows.

- Code change surface: PR diff, touched routes/components, migration scripts.

- Interfaces: OpenAPI/GraphQL schemas, Postman collections, feature flags.

How it works

- Use small connectors that fetch text/JSON, then normalize into a single document (e.g., Markdown + metadata).

- Chunk and embed those docs into a vector store (Chroma/Weaviate/PGVector) so agents can retrieve only what’s relevant per test.

Outputs

- A Context Pack:

{ story_id, acceptance_criteria[], changed_files[], api_endpoints[], designs[], risk_tags[] } - Links back to Jira/Figma/Repo for traceability.

Gotchas

- Garbage in, garbage out. If acceptance criteria are vague, your plan will be too. Add a tiny “criteria linter” agent that flags ambiguity: missing negative paths, undefined roles, no error states.

#2 Planning

(Identify what to test & how)

Goal: Convert context into a minimal, risk‑weighted test plan.

What the Planner Agent does

- Maps acceptance criteria → test objectives and user journeys.

- Prioritizes by risk (changed code, critical paths, known flaky areas).

- Decides test layers: unit hints for devs, API flows, UI happy/sad paths, contract checks.

- Produces structured artifacts; easy to read and easy for other agents to consume.

Example test plan (trimmed)

{

"story": "HRA-482",

"objectives": [

{"id":"OBJ-1","text":"Member can start HRA from Visits page"},

{"id":"OBJ-2","text":"Status bubble updates from Not Started → In Progress → Completed"}

],

"test_cases": [

{

"id":"TC-001",

"type":"ui",

"priority":"high",

"preconditions":["user role=member","seed visit: 2025-08-01"],

"steps":[

"login()","open('/visits')","click('Start HRA')","assert.url.contains('/hra')"

],

"assertions":[

"bubble('HRA').status == 'In Progress'"

],

"links":{"jira":"HRA-482","figma":"file/…"}

}

]

}

Outputs

- A Test Plan + Coverage Map: criteria ↔ test cases ↔ risk.

Gotchas

- Over‑planning kills velocity. Start with the smallest plan that protects the change, then expand via the feedback loop.

#3 Execution

(Playwright/Cypress + self‑healing pipelines)

Goal: Run the plan reliably, collect rich telemetry, and automatically recover from common failures.

What the Execution Agent does

- Generates or retrieves Playwright/Cypress code from the plan.

- Runs tests in CI, observing console logs, network, trace, and screenshots.

- Applies self‑healing tactics:

- Smart locators (role/label/data‑testid first; CSS/XPath as fallback).

- Retry with stability gates (e.g., wait for network idle, visible & enabled).

- Heuristic wait fixes (detect stale element exceptions; re‑query).

- Auto‑patch selectors if the DOM changed but intent is obvious.

Outputs

- Run artifacts: Playwright trace, videos, screenshots.

- Step‑level telemetry: timings, retries, locator changes used.

- Status per test with reason codes (flake, product bug, env issue).

Gotchas

- Self‑healing should be transparent. Always log what was “healed” and why, so humans can approve or tighten selectors.

#4 Learning

(Store results & patterns in memory)

Goal: Convert every run into institutional knowledge.

What the Learning Agent stores

- Run summaries: pass/fail, duration, primary flake causes.

- Environment fingerprints: versions, feature flags, data seeds.

- Selector stability: which locators are resilient per component.

- Known issues: link tests → Jira bugs, attach failure signatures.

Memory mechanics

- Short‑term memory: last N runs for fast heuristics.

- Long‑term memory: embeddings of failures & fixes so future planners can avoid repeats.

- Optional scoring: Reasoning Fidelity (did the agent follow its own plan?) and Long‑Horizon Consistency (does it make the same good choice across runs?).

Outputs

- A Quality Memory store that informs future plans, prioritization, and self‑healing rules.

#5 Feedback Loop

(Regenerate tests, reprioritize)

Goal: Close the loop: use what we learned to improve the plan and tests.

What happens

- If a test failed for product reasons → open/update Jira with reproduction notes & video.

- If it failed for automation reasons → the system proposes a patch:

- New locator strategy

- Additional wait condition

- Data‑seed fix

- If coverage is thin around a changed area → auto‑generate adjacent cases.

- If runtime is creeping up → re‑rank tests (critical first), shard more aggressively.

Outputs

- Proposed diffs to tests/fixtures.

- Updated risk/priority for the next run.

- A short changelog humans can review.

Gotchas

- Keep a human in the loop for patches that change intent. Agents can propose; engineers approve.

#6 Multi‑Agent Collaboration

(Planner, Tester, Debugger, Reporter)

Goal: Specialize. One “mega‑agent” quickly becomes a mess. A team of small, focused agents is more robust.

Common roles

- Planner: turns context into a plan + coverage map.

- Executor: runs tests + self‑healing tactics.

- Debugger/Critic: clusters failures and proposes fixes.

- Reporter: publishes results to Allure/Jira/Slack, writes a human‑readable summary.

- Coordinator: routes tasks between agents, enforces guardrails (budgets, timeouts, permissions).

Outputs

- Faster cycles, less error‑prone reasoning, clearer logs by responsibility.

Gotchas

- Tool sprawl. Keep a single orchestrator and a clear spec for how agents pass artifacts around (JSON contracts).

A Minimal, Practical Blueprint (you can ship in 1–2 weeks)

Starter stack

- Language: Python (works great with Playwright Python) or Node if your team prefers JS.

- Orchestrator: A small FastAPI/Flask service or a CLI runner.

- Vector store: Chroma or PGVector.

- Artifacts: Allure TestOps (results), S3 for traces, Slack for notifications.

- CI: GitHub Actions with a dedicated self‑hosted runner if you need HIPAA/PHI isolation.

CI trigger (example)

# .github/workflows/agentic-tests.yml

name: Agentic Tests

on:

pull_request:

types: [opened, synchronize, reopened]

jobs:

plan_and_test:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v4

- name: Setup Python

uses: actions/setup-python@v5

with: { python-version: '3.11' }

- name: Install deps

run: pip install -r qa-agents/requirements.txt

- name: Context → Plan

run: python qa-agents/main.py plan --pr ${{ github.event.pull_request.number }}

- name: Execute plan

run: python qa-agents/main.py run --plan .agentic/plan.json

- name: Publish results

run: python qa-agents/main.py report --plan .agentic/plan.json

Orchestrator skeleton (Python)

# qa-agents/main.py (trimmed)

from agents import Planner, Executor, Critic, Reporter, Memory

mem = Memory(db_url="sqlite:///qa_memory.db")

def plan(pr_number: int):

context = mem.ingest_from_sources(pr=pr_number, jira=True, figma=True, openapi=True)

test_plan = Planner(mem).create_plan(context)

mem.save_plan(test_plan, path=".agentic/plan.json")

def run(plan_path: str):

results = Executor(mem).run_plan(plan_path)

mem.save_run(results)

def report(plan_path: str):

results = mem.last_run()

Critic(mem).propose_fixes(results, plan_path)

Reporter(mem).to_allure_and_slack(results, plan_path)

if __name__ == "__main__":

# argparse omitted for brevity

pass

Self‑healing tactic (Playwright Python example)

# smart_click.py

from playwright.sync_api import Page

PREFERRED = ["role=", "data-testid=", "aria-label=", "text="]

def smart_click(page: Page, *selectors: str):

errors = []

for sel in selectors:

try:

page.get_by_role(sel) if sel.startswith("role=") else page.locator(sel)

page.locator(sel.replace("role=","")).click(timeout=5000)

return

except Exception as e:

errors.append(str(e))

# fallback: re-query by text if DOM shifted

try:

page.wait_for_load_state("networkidle", timeout=5000)

page.get_by_text(selectors[-1]).first.click()

return

except Exception as e:

errors.append(str(e))

raise AssertionError(f"smart_click failed. Tried {selectors}. Errors: {errors}")

Agent contracts (keep them boring)

{

"TestCase": {

"id": "TC-001",

"type": "ui|api|contract",

"priority": "high|med|low",

"preconditions": ["..."],

"steps": ["..."],

"assertions": ["..."],

"data": {"seed":"fixture-hra.json"},

"links": {"jira":"HRA-482","figma":"..."}

}

}

Governance, Safety, and Cost Controls

- Guardrails: Limit which tools each agent can call. Principle of least privilege for API tokens. Hard timeouts per step.

- Determinism where it counts: Freeze prompts for critical flows; require human approval for code diffs that change test intent.

- Observability: Push per‑step logs and agent decisions into Allure or an ELK stack. You should be able to reconstruct why a test changed.

- Budgets: Per‑PR token and runtime caps. The coordinator enforces them and fails fast with a readable message.

What to Measure (so the loop actually improves)

- MTTR for red builds: How quickly do agents cluster failures, propose fixes, and get you green again?

- Flake rate & top signatures: Are the same selectors/flows still noisy after a week?

- Coverage growth near change surface: Are we adding relevant tests where code changed?

- Reasoning Fidelity: % of runs where the Executor followed the Planner’s steps without unapproved improvisation.

- Long‑Horizon Consistency: Does the system make the same good choice across environments/releases?

Common Anti‑Patterns (and quick fixes)

- Mega‑Agent syndrome: Split roles; make artifacts first‑class (JSON plans, run reports).

- Opaque healing: Always log the “heal” and keep diffs small. If it can’t be explained, don’t auto‑merge it.

- Plan bloat: Cap plan size per PR (e.g., 8 cases). Use nightly to expand.

- Weak context: Add a simple “criteria linter” and a PR check that blocks if acceptance criteria are missing.

Rollout Plan You Can Copy

Week 1

- Wire Context Ingestion: Jira + PR diffs → Context Pack.

- Ship Planner v0 to produce a small plan JSON for each PR.

- Execute with your existing Playwright suite; don’t generate code yet.

Week 2

4. Add self‑healing helpers + richer telemetry (trace/video).

5. Store results into a lightweight memory DB; show a weekly “top flakes” report.

6. Introduce Critic/Reporter agents; ship Slack summaries with links to Allure + Jira.

Weeks 3–4 (optional)

7. Turn on code generation for adjacent tests only (humans approve).

8. Add a nightly “growth” job to explore more cases while PR runs stay lean.

Not Replacing; Elevating

Agentic automation isn’t about replacing engineers; it’s about elevating them. Let the loop handle the grind; context wrangling, plan scaffolding, investigation; so humans can make the right calls faster and spend time on the hard stuff: risk, architecture, and quality strategy.

👉 Want more posts like this? Subscribe and get the next one straight to your inbox. Subscribe to the Blog or Follow me on LinkedIn

Comments ()