From Power Plants to Chatbots: How AI Really Works

When you ask an AI a question, whether it’s ChatGPT, Claude, or something running on your phone, it feels like magic. But under the hood, there’s nothing magical about it.

It’s an enormous industrial stack, stretching from giant warehouses full of computers all the way down to the tiny math operations inside a neural network.

Let’s break it down layer by layer, so anyone, from a senior engineer to someone just curious, can see how this works.

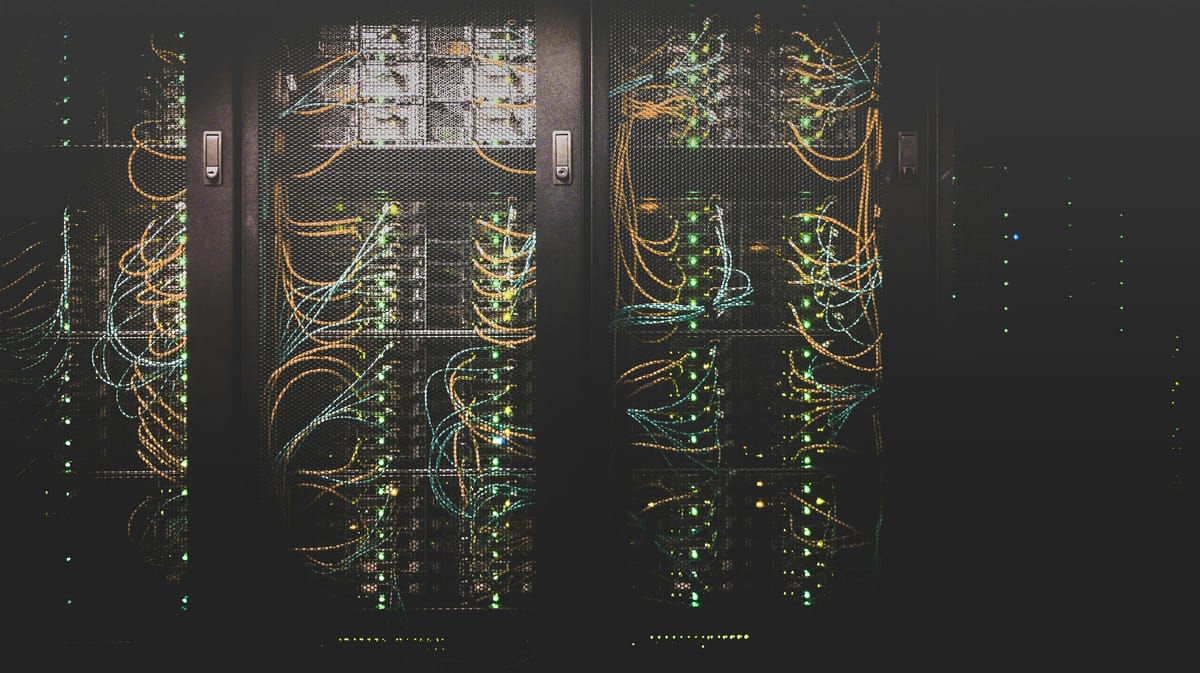

1. The Foundations: Data Centers

Think of AI as a factory, and the factory floor is the data center.

- Power: A cluster training a big AI model draws tens of megawatts of electricity, roughly enough to power a small town. That’s why these facilities are often built near cheap, low-carbon energy: hydro in Oregon, solar in Nevada, even nuclear in France.

- Cooling: All those chips run hot. Imagine thousands of space heaters crammed together. Data centers use everything from massive fans to liquid cooling pipes to keep them from melting down.

- Networking: Inside these warehouses, racks of machines need to talk to each other at blinding speeds. Special cabling and switches keep delays to microseconds.

- Storage: Training AI means feeding it petabytes of data (that’s millions of gigabytes). High-speed SSDs act as the “fridge” that keeps the ingredients ready to serve.

Without this physical backbone, nothing else happens.
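Here is a back-of-envelope check of the “small town” comparison in the power bullet. Both inputs are assumptions chosen for illustration. 30 MW stands in for “tens of megawatts.” 1.2 kW is a rough continuous draw for an average home, which works out to about 10,500 kWh per year spread over 8,760 hours.

```python
# Back-of-envelope: how many homes draw as much power as one training cluster?
# Both constants are illustrative assumptions, not figures for any real facility.
CLUSTER_POWER_MW = 30     # assumed stand-in for "tens of megawatts"
AVG_HOME_POWER_KW = 1.2   # assumed: ~10,500 kWh/year per home / 8,760 hours

def homes_equivalent(cluster_mw: float, home_kw: float) -> int:
    """Number of average homes whose combined continuous draw matches the cluster."""
    cluster_kw = cluster_mw * 1_000  # 1 MW = 1,000 kW
    return round(cluster_kw / home_kw)

print(homes_equivalent(CLUSTER_POWER_MW, AVG_HOME_POWER_KW))  # → 25000
```

Under these assumptions, a 30 MW cluster matches about 25,000 homes, which really is small-town territory.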

2. The Muscles: Servers & Chips

Inside each rack are servers built specifically for AI.

- GPUs (Graphics Processing Units): Originally built to render graphics for video games, now the workhorses of AI. They excel at doing thousands of simple calculations, mostly matrix multiplications, in parallel.

- CPUs: Still there, but mainly for orchestration: scheduling work, loading data, and keeping things organized.

- Specialized AI chips: Google has TPUs, startups are building wafer-sized processors, and NVIDIA is shipping GPUs that cost as much as luxury cars.

- Networking cards: Like the nervous system, letting thousands of GPUs share the load without bottlenecks.

Think of GPUs as engines. A single one is powerful, but modern AI requires fleets of them yoked together like draft horses.
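What does “doing thousands of calculations in parallel” look like? Most of a neural network’s work is matrix multiplication, and every cell of the result is an independent dot product.

The plain-Python sketch below computes those cells one after another, purely for illustration. A GPU would instead hand thousands of them to separate cores at the same time.

```python
from typing import List, Sequence

Matrix = List[List[float]]

def dot(row: Sequence[float], col: Sequence[float]) -> float:
    """One independent unit of work: multiply pairs of numbers and add them up."""
    return sum(a * b for a, b in zip(row, col))

def matmul(a: Matrix, b: Matrix) -> Matrix:
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    cols = list(zip(*b))  # the columns of b
    # Each output cell (i, j) depends only on row i of a and column j of b,
    # never on another output cell. That independence is what a GPU exploits.
    return [[dot(row, col) for col in cols] for row in a]

print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # → [[19, 22], [43, 50]]
```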

3. The Operating System for AI: Software Infrastructure

If you have a thousand GPUs, you need to make them act like one brain. That’s where software comes in.

- Cluster Managers: Systems like Kubernetes or SLURM decide which jobs run where.

- Frameworks: PyTorch, TensorFlow, and JAX let researchers define the math of their models.

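- Parallelism:

  - Data parallelism: every machine holds a full copy of the model and trains on a different slice of the data.

  - Model parallelism: split the model itself across machines when it’s too big to fit on one.

  - Pipeline parallelism: split the model’s layers into stages, like an assembly line, so different batches move through different stages at the same time.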

Behind the scenes, libraries like NVIDIA’s cuDNN handle the nitty-gritty math, so researchers can focus on model design instead of low-level details.
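Data parallelism is the easiest of the three to sketch. The toy below assumes a one-weight model (y = w * x) trained with mean squared error.

Each simulated worker computes a gradient on its own shard of the batch. Averaging those gradients, which is the job an “all-reduce” step does across real GPUs, gives the same answer as processing the whole batch on one machine. The workers here are ordinary function calls, not real processes.

```python
from typing import List, Tuple

Example = Tuple[float, float]  # (input x, target y)

def local_gradient(w: float, shard: List[Example]) -> float:
    """Gradient of mean((w*x - y)^2) with respect to w, over one worker's shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def data_parallel_gradient(w: float, batch: List[Example], workers: int) -> float:
    if len(batch) % workers:
        raise ValueError("this sketch assumes the batch splits evenly across workers")
    size = len(batch) // workers
    shards = [batch[i * size:(i + 1) * size] for i in range(workers)]
    # On real hardware, each local gradient would be computed on a different GPU at once.
    grads = [local_gradient(w, s) for s in shards]
    return sum(grads) / workers  # the "all-reduce": average the workers' gradients

batch = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
print(data_parallel_gradient(0.0, batch, workers=2))  # → -30.0
print(local_gradient(0.0, batch))                     # → -30.0 (same as one machine)
```

Equal shard sizes matter here. With uneven shards, a plain average of per-shard means would weight some examples more than others.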

4. The Brain: Neural Networks

At the top of the software stack sit the models themselves.

- Neurons & Layers: Each neuron is just a small math function: multiply its inputs by weights, add them up, and apply a simple nonlinearity. Stack enough of them and you get layers that can learn patterns in data.

- Training: The process is like teaching a child: the model makes guesses, sees where it’s wrong, and adjusts millions (or billions) of internal weights.

- Transformers: Invented in 2017, this architecture changed everything. Instead of processing words one at a time, transformers look at the whole context at once. That’s what makes large language models like GPT possible.
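The neuron and training bullets above fit in a few lines of Python. This is a toy, not how production frameworks are written.

A single neuron (a weighted sum plus a sigmoid nonlinearity) learns logical OR. It repeatedly guesses, measures its error, and nudges its weights. The OR task, learning rate, and epoch count are arbitrary choices for illustration.

```python
import math
from typing import List, Tuple

def neuron(weights: List[float], bias: float, inputs: List[float]) -> float:
    """Multiply inputs by weights, add them up, then squash into (0, 1) with a sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 / (1 + math.exp(-z))

def train(data: List[Tuple[List[float], float]], epochs: int = 1000,
          lr: float = 1.0) -> Tuple[List[float], float]:
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in data:
            guess = neuron(weights, bias, inputs)  # 1. make a guess
            error = guess - target                 # 2. see how wrong it is
            # 3. adjust each weight against the error (cross-entropy gradient for a sigmoid)
            weights = [w - lr * error * x for w, x in zip(weights, inputs)]
            bias -= lr * error
    return weights, bias

OR_DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
w, b = train(OR_DATA)
print([round(neuron(w, b, x)) for x, _ in OR_DATA])  # → [0, 1, 1, 1]
```

Real networks run the same guess, measure, adjust loop, just with billions of weights. Frameworks like PyTorch compute the gradients automatically.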

In short: transformers gave machines a way to pay attention, and that unlocked today’s AI boom.
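“Paying attention” has a concrete mathematical form: scaled dot-product attention, the core operation of the 2017 transformer. This bare-bones sketch makes a simplifying assumption: each token’s query, key, and value vectors are given directly. Real models compute them with learned weight matrices and run many attention “heads” side by side.

The loop handles one query at a time for readability. Real implementations compute all queries in a single matrix multiply, which is where the “whole context at once” parallelism comes from.

```python
import math
from typing import List

Vector = List[float]

def softmax(scores: Vector) -> Vector:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max first for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries: List[Vector], keys: List[Vector], values: List[Vector]) -> List[Vector]:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, one query at a time."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Score this token's query against every token's key (the whole context).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how much attention to pay to each token
        # Output: an attention-weighted blend of all the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Three tokens attending to each other (here queries and keys are the same vectors).
vecs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
print(attention(vecs, vecs, values))
```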

5. The Fuel: Data Pipelines

AI brains are useless without food. The food is data.

- Collection: Text, images, audio, code.

- Cleaning: Remove duplicates, junk, or harmful content.

- Tokenization: Break raw data into “tokens,” the small chunks models actually process.

- Sharding: Chop giant datasets into pieces so thousands of GPUs can digest them at once.

Think of this as the grocery supply chain that keeps the AI factory running.
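Here is a toy version of the four stages above, starting from data that has already been collected. Several simplifications are assumptions. The filter only drops duplicates and empty documents. The tokenizer splits on whitespace. Sharding deals items out round-robin. Production pipelines use subword tokenizers such as byte-pair encoding and far more elaborate filters.

```python
from typing import Dict, List

def clean(docs: List[str]) -> List[str]:
    """Drop exact duplicates and empty documents, keeping first-seen order."""
    seen = set()
    kept = []
    for doc in docs:
        text = doc.strip()
        if text and text not in seen:
            seen.add(text)
            kept.append(text)
    return kept

def tokenize(docs: List[str]) -> List[List[int]]:
    """Map each lowercase word to an integer ID; models see numbers, not text."""
    vocab: Dict[str, int] = {}
    # setdefault assigns the next free ID the first time a word appears.
    return [[vocab.setdefault(word, len(vocab)) for word in doc.lower().split()]
            for doc in docs]

def shard(items: List[List[int]], num_shards: int) -> List[List[List[int]]]:
    """Deal items round-robin into shards so each GPU worker gets its own slice."""
    return [items[i::num_shards] for i in range(num_shards)]

raw = ["The cat sat", "The cat sat", "   ", "A dog ran", "The dog sat"]
tokens = tokenize(clean(raw))
print(tokens)            # → [[0, 1, 2], [3, 4, 5], [0, 4, 2]]
print(shard(tokens, 2))  # → [[[0, 1, 2], [0, 4, 2]], [[3, 4, 5]]]
```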

6. The Delivery: Inference & Serving

Once an AI model is trained, it still has to answer your questions quickly. That’s called inference.

- Optimization: Models get slimmed down to run faster, for example through quantization (storing weights in fewer bits) and pruning (removing weights that contribute little).

- Inference servers: Specialized software that takes your prompt, runs it through the model, and spits out an answer.

- Scaling: Load balancers and autoscaling ensure millions of users can hit the system at once without it crashing.

- Edge AI: Smaller versions of these models now run directly on your laptop or phone.

That’s how your chatbot answers in seconds: the $100M-scale training run happened once, in advance, and your question only needs the finished model to generate a reply.
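Quantization, one of the slimming tricks above, can be sketched with symmetric 8-bit quantization. The sketch assumes a single scale factor for the whole weight list. Real toolkits often use per-channel scales and calibration data.

Each 32-bit float weight (4 bytes) becomes an 8-bit integer (1 byte). That is a 4x memory saving, at the cost of a small rounding error.

```python
from typing import List, Tuple

def quantize(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats into the int8 range [-127, 127] using one shared scale factor."""
    # The largest-magnitude weight maps to ±127; fall back to 1.0 if every weight is 0.
    scale = max(abs(w) for w in weights) / 127 or 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights for use at inference time."""
    return [v * scale for v in q]

weights = [0.52, -1.27, 0.003, 0.9]
q, scale = quantize(weights)
print(q)                     # → [52, -127, 0, 90]
print(dequantize(q, scale))  # close to the originals; 0.003 is rounded away to 0.0
```

Each recovered weight is off by at most half a scale step. Very small weights like 0.003 can vanish entirely, which is why quantized models are usually checked for accuracy loss before deployment.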

7. The Economics (Why It’s So Expensive)

Here’s the reality check:

- Training a GPT-4-sized model is estimated to cost tens to hundreds of millions of dollars.

- The GPUs that run it can cost $30,000+ each.

- Every question you ask an LLM uses measurable electricity: by commonly cited estimates, 10–100x more than a Google search.

This is why every AI company cares so much about efficiency.
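This back-of-envelope estimate shows how quickly these costs add up. Every input is a hypothetical assumption chosen for illustration: 10,000 GPUs, 90 days, $2 per GPU-hour to rent, and $30,000 per GPU to buy. None of these are reported figures for GPT-4 or any other model.

```python
def training_cost(num_gpus: int, days: float, usd_per_gpu_hour: float) -> float:
    """Rented compute cost: number of GPUs x hours of training x hourly price."""
    return num_gpus * days * 24 * usd_per_gpu_hour

def hardware_cost(num_gpus: int, usd_per_gpu: float) -> float:
    """Up-front cost of buying the GPUs alone (no servers, networking, or power)."""
    return num_gpus * usd_per_gpu

# All inputs below are hypothetical.
print(f"${training_cost(10_000, 90, 2.0):,.0f}")  # → $43,200,000
print(f"${hardware_cost(10_000, 30_000):,.0f}")   # → $300,000,000
```

Even with these made-up inputs, renting the compute lands in the tens of millions of dollars, and buying the chips outright reaches the hundreds of millions. Both are consistent with the ranges above.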

8. The Road Ahead

Where is all this going?

- More efficient models (smaller, faster, cheaper).

- New hardware (optical chips, neuromorphic computing).

- Cleaner energy (AI powered by renewables).

- Agentic AI (systems that can act, not just answer).

- On-device AI (your phone as an AI node, not just the cloud).

AI is still in its early “steam engine” era. The factories are huge, the machines are expensive, but the breakthroughs are happening fast.

Something to ponder...

The next time you type a question into an AI, remember:

Behind that smooth, instant reply is a chain stretching from power plants → data centers → GPUs → neural networks → your screen.

It’s not magic.

It’s the most complex supply chain for intelligence humanity has ever built.

