Fighting the Flake: Building a Smarter System to Identify and Triage Flaky Tests in CI

Every engineering team eventually runs into the same silent killer of velocity: flaky tests. They fail one minute, pass the next, and often consume hours of triage time with no clear root cause. Teams either ignore them (“oh, that one always fails once in a while”) or over-invest in debugging, only to discover the failure had nothing to do with the product at all.

Flakes erode trust in CI pipelines. They slow down releases, distract engineers, and make quality teams look like gatekeepers instead of accelerators. The bigger your test suite, the worse the problem becomes.

What if there were a systematic way to classify, tag, and score flakes automatically from your CI logs? Instead of being trapped in the reactive cycle of “rerun until green,” you’d have a structured approach to diagnosis, one that tells you not just what failed, but why it’s likely flaky and what to do about it.

In this article, I’ll walk through a framework for building exactly that: a CI log tagging and scoring system that helps QA teams identify flaky tests, assign them a rubric-based severity score, and decide on actions, from quick fixes to isolating them from automation entirely.

The Pain of Flaky Tests

Before building a solution, it’s worth clarifying why flaky tests are uniquely painful.

- They waste time. Engineers spend hours investigating red builds that turn out to be false alarms.

- They mask real issues. If every other failure is flaky, you can’t trust CI to catch true regressions.

- They damage culture. Teams start to distrust the pipeline, rerun tests until they pass, and eventually ship code without confidence.

- They grow like weeds. Once tolerated, they multiply, because no one has time to pull them out at the root.

Most teams rely on anecdote (“that test is flaky”) or tribal knowledge (“don’t trust the checkout test on Safari”). Rarely do they have a structured system to identify flakes at scale.

The Core Idea: Tagging CI Logs

At the heart of this approach is simple pattern recognition. Every flaky failure has fingerprints in the logs. Maybe it’s:

- Infrastructure-related: timeout, socket reset, resource unavailable.

- Network-related: DNS lookup failed, connection refused.

- Product-related: race conditions, async waits, environment mismatch.

- Data-related: test data not seeded, cleanup collision.

- Third-party dependencies: API rate-limits, sandbox failures.

By parsing CI logs and tagging failures with a taxonomy of common patterns, you can begin to classify flakes automatically.

Think of it like spam filters in email: at first, you build rules based on obvious patterns (“contains the phrase ECONNRESET”), and over time, you refine the taxonomy into categories that make sense for your domain.

Step 1: Define a Taxonomy of Flakes

Start with the most common failure buckets. For example:

- Infra – container failed, disk space, machine crash, CI runner timeout.

- Network – socket hangup, DNS errors, HTTP 503s.

- Product/UI – race conditions in frontend waits, animation delays, element not clickable.

- Test Harness – bad mocks, incorrect test data setup, clock skew.

- Third-Party – Stripe sandbox outage, S3 bucket auth issues.

This taxonomy is not static; you’ll evolve it as you encounter new recurring failures. The point is to have a starting set of labels that you can attach to each log line that indicates a flaky signature.
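One way to keep the labels consistent across scripts is to write the taxonomy down as code. The sketch below is a minimal example, assuming Python; the `FlakeTag` name and the `UNKNOWN` fallback are my own additions for illustration, and the example signatures are simply the ones from the list above.

```python
from enum import Enum


class FlakeTag(Enum):
    """Starting taxonomy of flake categories; extend it as new patterns recur."""

    INFRA = "Infra"
    NETWORK = "Network"
    PRODUCT_UI = "Product/UI"
    TEST_HARNESS = "Test Harness"
    THIRD_PARTY = "Third-Party"
    UNKNOWN = "Unknown"  # assumption: fallback for failures no rule matches yet


# Example signatures per category, copied from the list above.
# Illustrative only; your own logs decide what belongs here.
EXAMPLE_SIGNATURES = {
    FlakeTag.INFRA: ["container failed", "disk space", "machine crash", "CI runner timeout"],
    FlakeTag.NETWORK: ["socket hangup", "DNS errors", "HTTP 503s"],
    FlakeTag.PRODUCT_UI: ["race conditions in frontend waits", "animation delays", "element not clickable"],
    FlakeTag.TEST_HARNESS: ["bad mocks", "incorrect test data setup", "clock skew"],
    FlakeTag.THIRD_PARTY: ["Stripe sandbox outage", "S3 bucket auth issues"],
}
```

Using an enum rather than free-form strings means a typo in a tag name fails loudly instead of quietly creating a new category.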

Step 2: Build a Parser

Next, build a lightweight parser that consumes raw CI logs and runs regex/string-matching rules.

- If it sees `ECONNRESET` → Tag: Network.

- If it sees `TimeoutError: waiting for selector` → Tag: Product/UI.

- If it sees `No space left on device` → Tag: Infra.

This can be as simple as a Python script with a dictionary of patterns → tags. Over time, you can get fancy with ML/NLP, but most teams can start with rule-based tagging.
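Here is a minimal sketch of that script, assuming the three rules above are the whole rule set. The `RULES` and `tag_log` names and the sample log text are hypothetical, chosen only for illustration.

```python
import re

# Pattern -> tag rules. The three patterns come from the examples above;
# a real rule set would grow out of your own logs.
RULES = [
    (re.compile(r"ECONNRESET"), "Network"),
    (re.compile(r"TimeoutError: waiting for selector"), "Product/UI"),
    (re.compile(r"No space left on device"), "Infra"),
]


def tag_log(log_text: str) -> list[str]:
    """Return the distinct tags whose patterns appear in the log, in rule order."""
    tags = []
    for pattern, tag in RULES:
        if pattern.search(log_text) and tag not in tags:
            tags.append(tag)
    return tags


# Made-up failure output, for illustration only.
sample = 'page.click failed\nTimeoutError: waiting for selector "#pay"\n'
print(tag_log(sample))  # ['Product/UI']
```

A list of compiled patterns keeps rule order explicit, which matters once one log can match several categories and you want the most specific rule to come first.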

Step 3: Introduce a Scoring Rubric

Not all flakes are equal. Some fail rarely, some fail daily. Some block the main branch, some only annoy a single team.

That’s why you need a rubric that assigns a flake severity score. For example:

- Score 1 (Low): Fails less than 1% of runs. Annoying, but tolerable.

- Score 2 (Moderate): Fails 1–5% of runs. Worth tracking.

- Score 3 (High): Fails more than 5% of runs. Impacts velocity, requires isolation or fix.

Other factors you might include in the rubric:

- Frequency of failure

- Time lost to reruns

- Number of teams impacted

- Critical path relevance (does it block production deploys?)

The rubric should be transparent and repeatable; every flake gets a number, so you’re not arguing about whether it “feels” flaky.
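As a sketch, the failure-rate thresholds above translate into a few lines of Python. The `flake_score` name is hypothetical, and the `blocks_deploys` bump is my own assumption about how a critical-path factor might be folded in; treat it as one possible rubric, not the rubric.

```python
def flake_score(failures: int, runs: int, blocks_deploys: bool = False) -> int:
    """Map a test's failure rate to the 1-3 rubric above."""
    if runs <= 0:
        raise ValueError("runs must be positive")
    if not 0 <= failures <= runs:
        raise ValueError("failures must be between 0 and runs")

    # Integer comparisons avoid floating-point surprises at the 1% and 5% boundaries.
    if failures * 100 < runs:
        score = 1  # Low: fails in fewer than 1% of runs
    elif failures * 100 <= runs * 5:
        score = 2  # Moderate: fails in 1-5% of runs
    else:
        score = 3  # High: fails in more than 5% of runs

    # Assumption (not part of the rubric above): a test on the critical
    # path is bumped up one level, capped at 3.
    if blocks_deploys:
        score = min(3, score + 1)
    return score


print(flake_score(3, 1000))                        # 1
print(flake_score(30, 1000))                       # 2
print(flake_score(80, 1000))                       # 3
print(flake_score(30, 1000, blocks_deploys=True))  # 3
```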

Step 4: Decide on Actions

Once a test has a tag and a score, you can make structured decisions:

- Low-score flakes (1): Track in dashboard, leave in suite for now.

- Moderate flakes (2): Quarantine. Move the test to an optional or nightly suite.

- High flakes (3): Isolate or de-automate. Either fix immediately or remove from automation until the root cause is resolved.

Here’s where many teams hesitate: is it ever OK to “de-automate” a test? The answer is yes. If a flaky test is slowing down 100 engineers every day, the opportunity cost is far higher than letting QA run it manually until it’s fixed.

The point is not to abandon coverage, but to stop letting a single unreliable test hold your pipeline hostage.
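The score-to-action mapping can be sketched as a lookup table. The action labels, the `plan_suites` helper, and the test names below are all hypothetical; how you actually move a test between suites depends on your test runner.

```python
# Score -> action, following the three tiers above.
ACTIONS = {
    1: "track",       # stay in the suite, show on the dashboard
    2: "quarantine",  # move to an optional or nightly suite
    3: "isolate",     # fix now, or remove from automation until fixed
}


def plan_suites(scores: dict[str, int]) -> dict[str, list[str]]:
    """Group test names by the action their score calls for."""
    plan: dict[str, list[str]] = {action: [] for action in ACTIONS.values()}
    for test_name, score in sorted(scores.items()):
        if score not in ACTIONS:
            raise ValueError(f"unknown score {score!r} for {test_name}")
        plan[ACTIONS[score]].append(test_name)
    return plan


# Hypothetical test names, for illustration only.
print(plan_suites({"test_login": 1, "test_checkout": 3, "test_search": 2}))
# {'track': ['test_login'], 'quarantine': ['test_search'], 'isolate': ['test_checkout']}
```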

Step 5: Build Feedback Loops

Over time, this system should feed back into your engineering process.

- Dashboards: Show trending flaky categories and their scores.

- Ownership: Assign teams to categories (infra team handles infra flakes, QA handles harness flakes).

- Continuous improvement: Use the taxonomy to prioritize investments (e.g., “40% of our flakes are infra → we need better CI runners”).

This is where the system moves from reactive triage to proactive quality engineering.
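A statement like “40% of our flakes are infra” falls out of a simple count over the tags you have collected. The sketch below assumes one tag per tagged failure; `category_share` is a hypothetical helper and the sample data is made up.

```python
from collections import Counter


def category_share(tags: list[str]) -> dict[str, float]:
    """Return each category's share of tagged failures, as a percentage."""
    counts = Counter(tags)
    total = sum(counts.values())
    if total == 0:
        return {}
    # most_common() orders categories from largest bucket to smallest.
    return {tag: round(100 * n / total, 1) for tag, n in counts.most_common()}


# Made-up tags for ten failures, for illustration only.
recent_tags = ["Infra"] * 4 + ["Network"] * 3 + ["Product/UI"] * 3
print(category_share(recent_tags))
# {'Infra': 40.0, 'Network': 30.0, 'Product/UI': 30.0}
```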

A Day in the Life with Flake Scoring

Imagine this workflow:

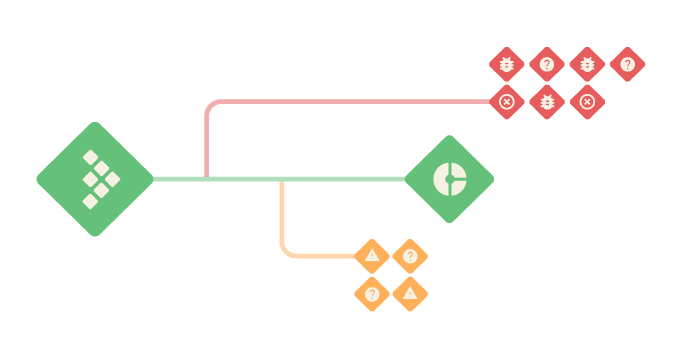

- A test fails in CI.

- The log parser scans the failure output, tags it as Network, assigns Score 2.

- The test run dashboard updates: “Network flakes: 3 failures in last 24 hours.”

- The CI pipeline automatically retries the test, but also files a ticket with the logs, tag, and score.

- The QA lead reviews the dashboard weekly and sees that “Network” is the biggest bucket of Score 2s.

- Action item: move those tests into a quarantined suite until network stability is improved.

Instead of endless Slack threads debating whether a failure “looked flaky,” the system provides evidence, tags, and guidance.

Benefits for QA and Dev Teams

- Faster triage. Engineers know the category instantly instead of manually reading logs.

- Higher trust in CI. Teams believe failures mean something.

- Better prioritization. Data-driven view of where flakes actually come from.

- Velocity restored. Pipelines run cleaner, teams ship faster.

And for QA teams specifically, this transforms their role: instead of being “the team that reruns tests,” they become the team that engineers the system of quality, building tooling that empowers everyone.

Getting Started Today

If you want to try this approach without a major investment, here’s how to start small:

- Pick 3–5 common failure patterns from your CI logs.

- Write a simple parser (Python, Node, whatever you use) that tags logs when those patterns appear.

- Start a spreadsheet or dashboard where each test gets a flake tag and score.

- Meet weekly to review the flake dashboard and decide actions.

- Expand taxonomy as new patterns emerge.

You don’t need ML. You don’t need a vendor tool. You just need a repeatable process to classify and score flakes. Over time, the taxonomy becomes an asset unique to your team, and the scoring rubric becomes a shared language for deciding what stays in CI and what gets quarantined.
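If the “spreadsheet” starts life as a CSV file, the standard library is enough. This is a minimal sketch: the column names, the `write_flake_sheet` helper, and the example row are assumptions for illustration, and it writes to an in-memory buffer where a real script would open a file.

```python
import csv
import io

# Assumed columns: one row per test, with its flake tag and score.
FIELDS = ["test", "tag", "score"]


def write_flake_sheet(rows, out) -> None:
    """Write flake records as CSV with a header row to a file-like object."""
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


# In-memory buffer for the example; pass an opened file in real use.
buffer = io.StringIO()
write_flake_sheet(
    [{"test": "test_checkout_safari", "tag": "Network", "score": 2}],  # hypothetical row
    buffer,
)
print(buffer.getvalue())
```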

Looking Ahead

Down the road, you could:

- Train ML models on your logs to predict flakiness.

- Automate quarantine/isolation directly from CI.

- Correlate flakes with code changes to spot risky areas of the codebase.

- Feed results into developer dashboards so engineers see flake risk in real-time.

But you don’t have to start there. Even the simplest version (regex tags plus rubric scores) can cut triage time substantially and give your team confidence that CI failures are worth investigating.

Flakes Will Happen...

Flaky tests are inevitable. What matters is whether your team has the discipline to confront them systematically instead of living with the pain.

By building a tagging taxonomy, a parser, and a scoring rubric, you can transform flakes from a source of frustration into a source of insight. You’ll know which failures to fix, which to quarantine, and which to de-automate, and your CI will become faster, more trustworthy, and more valuable to the entire engineering org.

In the end, the goal is not to eliminate every flake forever. The goal is to make flaky tests visible, measurable, and actionable. Once you do that, you’ve taken one of the hardest parts of QA and turned it into a strength.
